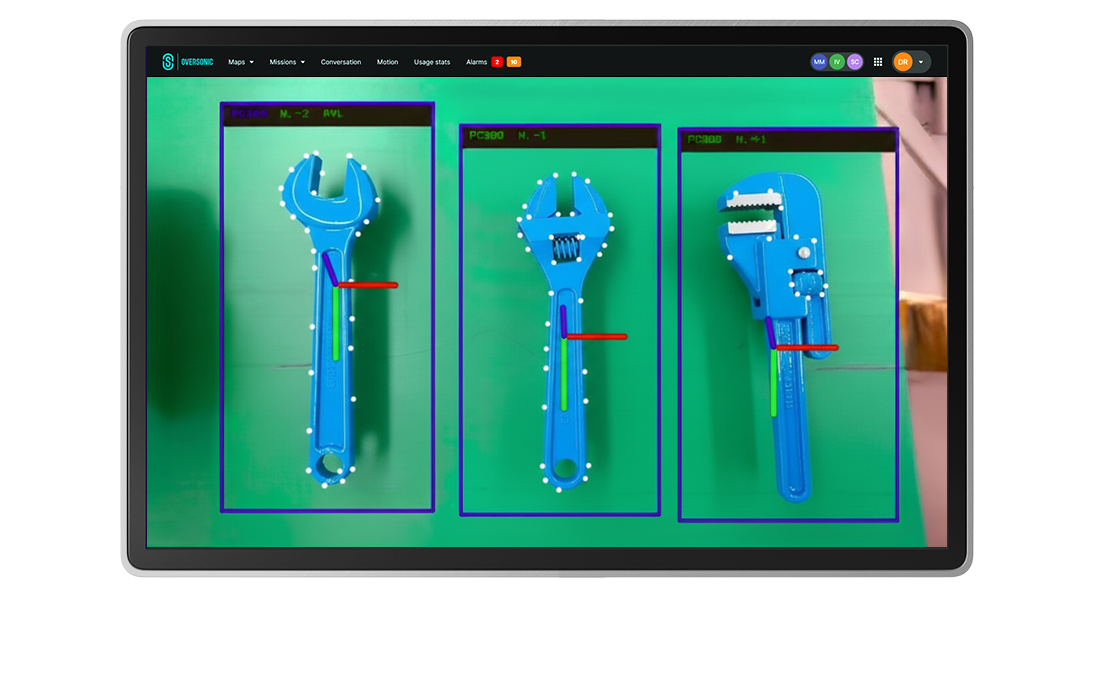

The module combines depth cameras, LiDAR, and proprietary neural networks to deliver real-time 3D understanding of the robot's surroundings. Operating at up to 30 frames per second with millimeter-level pose accuracy, it recognizes and tracks objects, people, and body movements even in dynamic environments.

Core capabilities include 3D object pose estimation, human recognition and re-identification, gesture and movement tracking, and defect detection. Support for multiple sensor types (RGB-D, infrared, and thermal) keeps perception robust under varying lighting and environmental conditions.
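Re-identification is often implemented by comparing appearance embeddings of detected people. As a minimal sketch, assume each person is already represented by a fixed-length embedding vector. The module's actual embedding model and matching rule are not described here. Under that assumption, a query can be matched against a gallery of known IDs by cosine similarity. The function names `cosine_similarity` and `reidentify` and the 0.7 threshold are illustrative assumptions, not the module's API.

```python
import math
from typing import Dict, List, Optional

# Hypothetical helpers for illustration; not the perception module's API.


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero embedding as matching nothing
    return dot / (norm_a * norm_b)


def reidentify(
    query: List[float],
    gallery: Dict[str, List[float]],
    threshold: float = 0.7,  # assumed value; would be tuned on real data
) -> Optional[str]:
    """Return the gallery ID closest to the query, or None if no score reaches the threshold."""
    best_id: Optional[str] = None
    best_score = -1.0
    for person_id, embedding in gallery.items():
        score = cosine_similarity(query, embedding)
        if score > best_score:
            best_id, best_score = person_id, score
    return best_id if best_score >= threshold else None


gallery = {"worker_a": [1.0, 0.0, 0.0], "worker_b": [0.0, 1.0, 0.0]}
print(reidentify([0.9, 0.1, 0.0], gallery))  # worker_a
print(reidentify([0.0, 0.0, 1.0], gallery))  # None
```

Returning `None` below the threshold lets a tracker register a new identity instead of forcing a match to the nearest known person.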

All data is processed in compliance with the GDPR, with optional secure cloud integration for large-scale training.

| Capability | Description |
|---|---|
| Object & human recognition | Identifies tools, parts, and people in real time. |
| Body movement tracking | Detects gestures and ergonomic risks. |
| Defect detection | Measurement, surface analysis, performance validation. |
| Semantic scene mapping | Contextual understanding for navigation. |

| Specification | Value |
|---|---|
| Video resolution | 1280×720 px |
| Pose detection frequency | 30 Hz |
| Minimum detectable object diameter | 30 mm |
| Minimum lighting conditions | ≥ 300 lx |
| Computing power | Edge (NVIDIA GPU), cloud |
| Compatible cameras | RealSense, Luxonis, Teledyne |

| Facial recognition | |
|---|---|
| Max. detection distance | 1.5 m |
| Minimum dwell time | 1 s |
| Anti-spoofing | Supported |
| Embedding sharing | GDPR compliant |

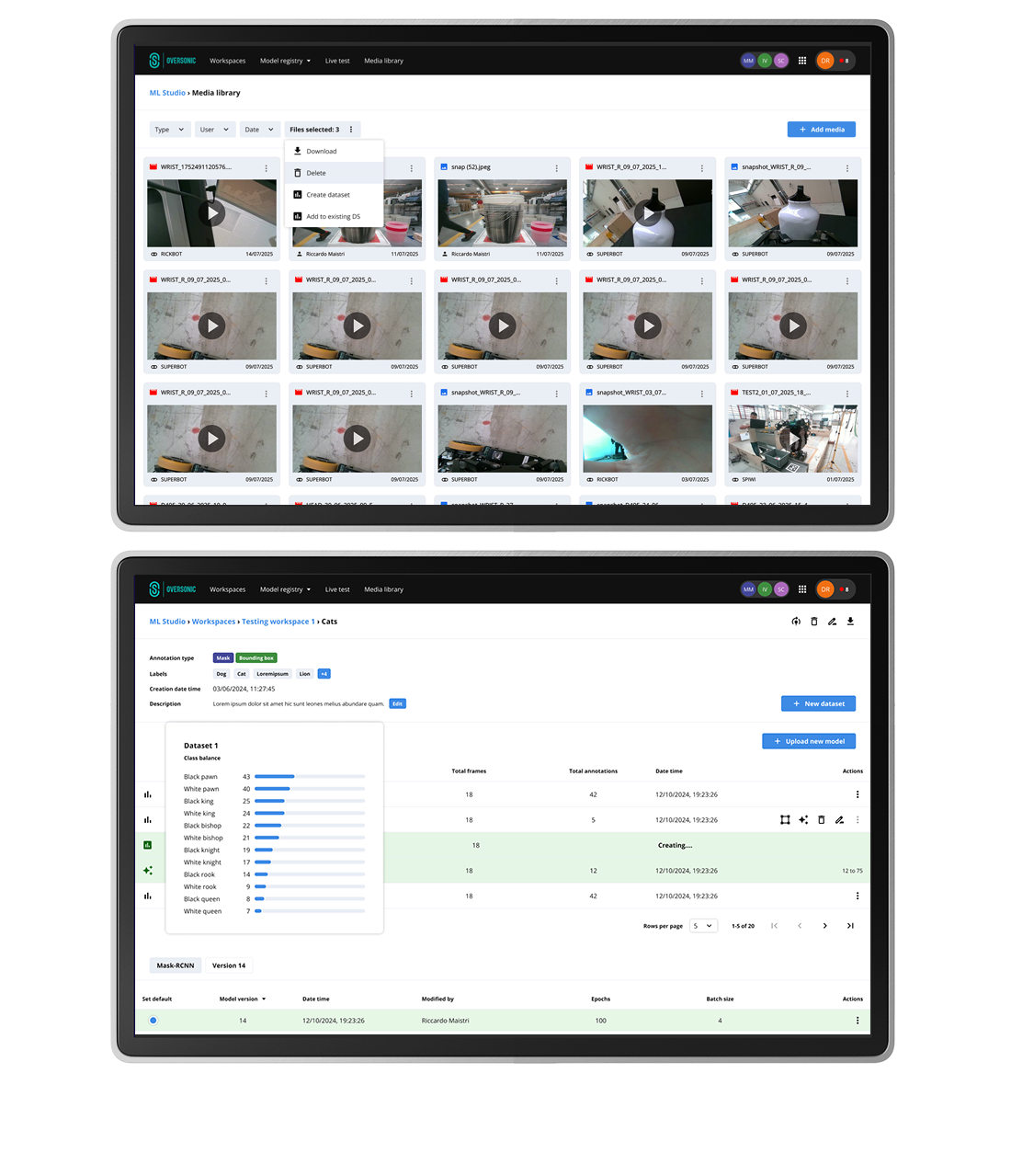

ML Studio

ML Studio is a comprehensive machine learning pipeline platform that manages the entire computer vision workflow from data collection to model deployment. The system orchestrates data annotation, model training, validation, and auto-annotation processes through an integrated serverless architecture.

Built on Label Studio for annotation and Google Vertex AI for compute, it enables scalable development of vision-based AI models for robotic applications.

The platform supports multiple annotation types, including segmentation masks, keypoints, and bounding boxes, with specialized tools for dataset preprocessing and automated keypoint generation from geometric shapes.
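The text does not specify how keypoints are derived from geometric shapes. One plausible scheme, shown here as a hypothetical sketch, takes an ellipse annotation (center, semi-axes, rotation) and emits its center followed by evenly spaced boundary points. `ellipse_keypoints` and its parameters are illustrative names, not ML Studio's API.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def ellipse_keypoints(
    cx: float, cy: float, a: float, b: float, theta: float, n_boundary: int = 8
) -> List[Point]:
    """Hypothetical scheme: the ellipse center followed by n_boundary points
    spaced evenly in the parametric angle around the boundary.

    (cx, cy) is the center, a and b are the semi-axis lengths, and theta is the
    rotation of the a-axis in radians (image coordinates).
    """
    if n_boundary < 0:
        raise ValueError("n_boundary must be non-negative")
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    points: List[Point] = [(cx, cy)]
    for k in range(n_boundary):
        t = 2.0 * math.pi * k / n_boundary
        # Point on the axis-aligned ellipse centered at the origin
        x, y = a * math.cos(t), b * math.sin(t)
        # Rotate by theta, then translate to the ellipse center
        points.append((cx + x * cos_t - y * sin_t, cy + x * sin_t + y * cos_t))
    return points


# Center plus the four axis endpoints of an unrotated 40x20 px ellipse
for px, py in ellipse_keypoints(100.0, 50.0, 20.0, 10.0, 0.0, n_boundary=4):
    print(round(px, 6), round(py, 6))
```

Spacing by parametric angle keeps the point order stable across annotations, which matters for keypoint models that expect a fixed keypoint index per semantic location. It does not give equal arc length between points on elongated ellipses.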

| Capability | Description |
|---|---|
| Complete ML pipeline | End-to-end workflow management covering data collection, annotation, training, model registry, and deployment, with integrated version control for trained models. |
| Integrated annotation system | Label Studio integration providing professional-grade annotation tools with support for masks, keypoints, bounding boxes, and ellipses. |
| Dataset creation | Auto-annotation using pre-trained models, plus specialized tools for keypoint generation from geometric shapes, AprilTag detection, and perspective transformation. |
| Project management | Workspace-based organization with project-level configuration, supporting multiple datasets per project and consistent inheritance of training parameters. |
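Perspective transformation is commonly expressed as a 3×3 homography estimated from four point correspondences. The corners of a detected AprilTag would be one such source of correspondences, though that pairing is an assumption here. The sketch below uses the textbook direct linear method. It fixes the bottom-right entry of H to 1, solves the resulting 8×8 linear system, and maps annotation points through H. It is a generic illustration, not necessarily how ML Studio implements the step, and all function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(A: Matrix, b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a square system A x = b."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def homography_from_4_points(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """3x3 homography H (with H[2][2] = 1) mapping each src point onto its dst point."""
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("exactly four correspondences are required")
    A: Matrix = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        # u * (h6 x + h7 y + 1) = h0 x + h1 y + h2
        A.append([x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y])
        b.append(u)
        # v * (h6 x + h7 y + 1) = h3 x + h4 y + h5
        A.append([0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y])
        b.append(v)
    h = solve_linear(A, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(H: Matrix, p: Point) -> Point:
    """Map a 2D point through H using homogeneous coordinates."""
    x, y = p
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (
        (H[0][0] * x + H[0][1] * y + H[0][2]) / w,
        (H[1][0] * x + H[1][1] * y + H[1][2]) / w,
    )


# Map a point from a unit-square (tag-like) frame into image pixels; values are illustrative
H = homography_from_4_points([(0, 0), (1, 0), (1, 1), (0, 1)], [(10, 10), (110, 20), (100, 120), (5, 90)])
print(apply_homography(H, (0.5, 0.5)))
```

With more than four correspondences, a least-squares or RANSAC estimate would normally replace the exact solve.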

ML Studio streamlines the development of computer vision models for robotic applications by providing an integrated platform that reduces the complexity of dataset creation, annotation, and model training while maintaining professional-grade capabilities for production deployment.


- Serverless cloud-native architecture
- Google Vertex AI compute backend
- Label Studio open-source integration
- Mask R-CNN and Keypoint R-CNN model support
- Multi-format annotation capabilities (masks, keypoints, bounding boxes)
- COCO model auto-annotation compatibility
- AprilTag-based 3D pose integration
- Configurable data augmentation pipeline
- Video frame downsampling support
- Early stopping validation methods
- Versioned model registry
- Cross-project dataset management
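Two of the features above, video frame downsampling and early stopping, are standard techniques. The sketch shows one common form of each. The first keeps every k-th frame so a video does not exceed a target frame rate. The second uses patience-based early stopping on a validation loss. The names `downsample_frames`, `EarlyStopping`, `target_fps`, `patience`, and `min_delta` are illustrative assumptions, not ML Studio settings.

```python
import math
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def downsample_frames(frames: Sequence[T], source_fps: float, target_fps: float) -> List[T]:
    """Keep every k-th frame so the output rate is at most target_fps (hypothetical helper)."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    step = max(1, math.ceil(source_fps / target_fps))
    return list(frames[::step])


class EarlyStopping:
    """Patience-based early stopping on a metric where lower is better (e.g. validation loss)."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best = math.inf
        self.epochs_without_improvement = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


print(downsample_frames(list(range(30)), source_fps=30, target_fps=5))  # [0, 6, 12, 18, 24]

stopper = EarlyStopping(patience=2)
for epoch, loss in enumerate([1.0, 0.8, 0.85, 0.9]):
    if stopper.step(loss):
        print(f"stopping after epoch {epoch}")  # stopping after epoch 3
        break
```

Downsampling consecutive video frames mainly reduces near-duplicate training images. Patience-based stopping tolerates a few noisy validation epochs before ending a run.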